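ClickHouse is an open-source, column-oriented DBMS for online analytical processing (OLAP). It was developed by the Russian IT company Yandex for the Yandex.Metrica web analytics service and is mostly used for OLAP workloads. This post records how to set up and deploy clickhouse-operator.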

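Only the deployment steps for the ClickHouse operator are covered here; how it works internally, and how operators work in general, is not explained in detail.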

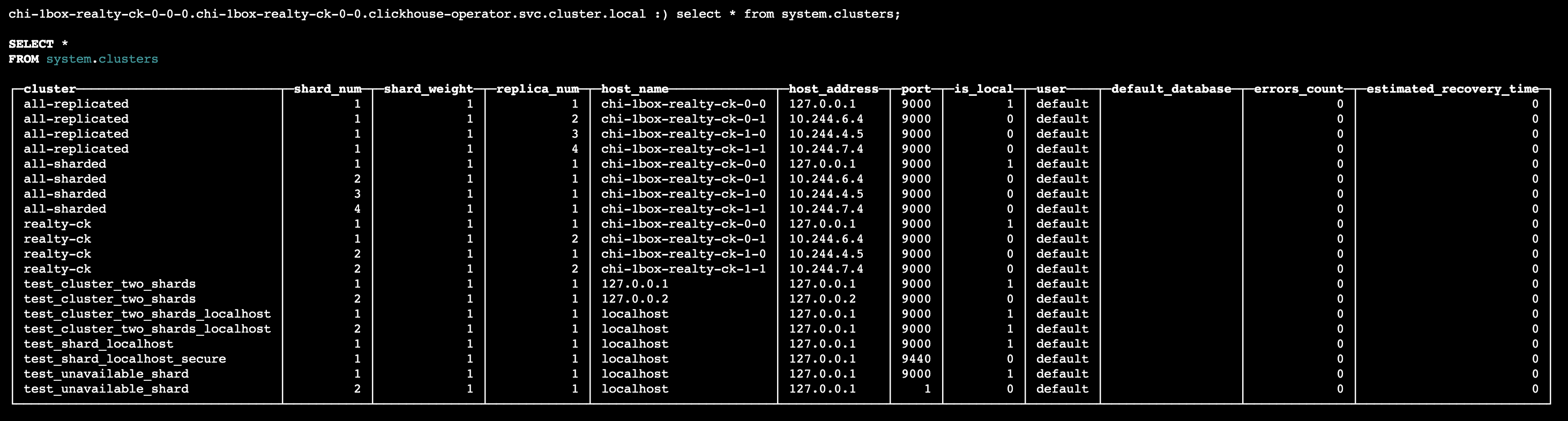

The example is a 2x2 ClickHouse cluster (2 shards, 2 replicas each) using replicated tables.

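Deploying ZooKeeper

Replicated tables need ZooKeeper for coordination, so this setup uses the ZooKeeper container deployment published on the ClickHouse GitHub: a 3-node StatefulSet, with the YAML file available there. In production it is best to back it with PVs for availability; since this is only a test environment, emptyDir is used here.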

    kubectl -n clickhouse-operator apply -f zk-node-3.yaml

Tainting the nodes

ClickHouse is resource hungry, so taints are used to control where it gets scheduled.

    kubectl taint nodes k8s-node-249 clickhouse=enable:NoExecute
    kubectl label nodes kube-node-249 clickhouse=enable
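The taint and the label have to land on the same node, so it can help to pass the node name only once. The following is a minimal sketch under that assumption: `ck_node_cmds` is a made-up helper name (not part of kubectl or the operator), and it only prints the two commands so they can be reviewed before being run.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kubectl or the operator): prints the
# taint and label commands for one node so both always use the same name.
ck_node_cmds() {
  local node="$1"
  local key="${2:-clickhouse}"   # taint/label key used in this post
  local value="${3:-enable}"     # taint/label value used in this post
  printf 'kubectl taint nodes %s %s=%s:NoExecute\n' "$node" "$key" "$value"
  printf 'kubectl label nodes %s %s=%s\n' "$node" "$key" "$value"
}

# Usage: review the output first, then pipe it to sh to actually run it.
#   ck_node_cmds k8s-node-249
#   ck_node_cmds k8s-node-249 | sh
```

Printing instead of executing keeps the sketch safe to try on a machine without cluster access.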

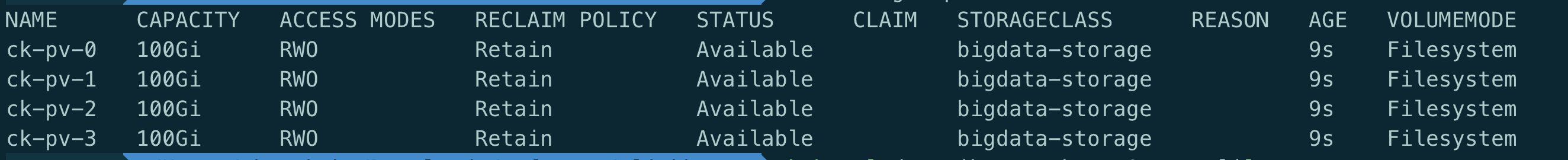

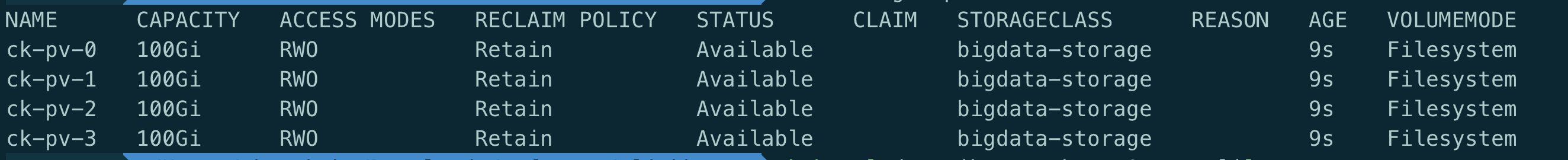

Local PV

Configuring the local PV

This configures the storage ClickHouse will use. No shared storage is involved; the host's file system is used directly as the PV.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: bigdata-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: ck-pv-0
    spec:
      capacity:
        storage: 100Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: bigdata-storage
      local:
        path: /data/bigdata
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: clickhouse
                  operator: In
                  values:
                    - enable
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: ck-pv-1
    spec:
      capacity:
        storage: 100Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: bigdata-storage
      local:
        path: /data/bigdata
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: clickhouse
                  operator: In
                  values:
                    - enable
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: ck-pv-2
    spec:
      capacity:
        storage: 100Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: bigdata-storage
      local:
        path: /data/bigdata
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: clickhouse
                  operator: In
                  values:
                    - enable
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: ck-pv-3
    spec:
      capacity:
        storage: 100Gi
      volumeMode: Filesystem
      accessModes:
        - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: bigdata-storage
      local:
        path: /data/bigdata
      nodeAffinity:
        required:
          nodeSelectorTerms:
            - matchExpressions:
                - key: clickhouse
                  operator: In
                  values:
                    - enable

    kubectl apply -f pv-pvc.yaml
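The four PersistentVolume definitions differ only in their name, so they could also be generated in a loop rather than written out by hand. A rough sketch, assuming bash; `gen_local_pvs` is a hypothetical helper name and its defaults simply mirror the values used in this post.

```shell
#!/usr/bin/env bash
# Sketch: emit N local PersistentVolumes that differ only in their name.
# gen_local_pvs is a hypothetical helper; defaults mirror this post's manifest.
gen_local_pvs() {
  local count="${1:-4}"
  local path="${2:-/data/bigdata}"
  local size="${3:-100Gi}"
  local i
  for ((i = 0; i < count; i++)); do
    cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ck-pv-${i}
spec:
  capacity:
    storage: ${size}
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: bigdata-storage
  local:
    path: ${path}
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: clickhouse
              operator: In
              values:
                - enable
EOF
  done
}

# Usage (the StorageClass still has to be applied separately):
#   gen_local_pvs 4 | kubectl apply -f -
```

Generating the manifests keeps the count of PVs in step with shards x replicas when the layout changes.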

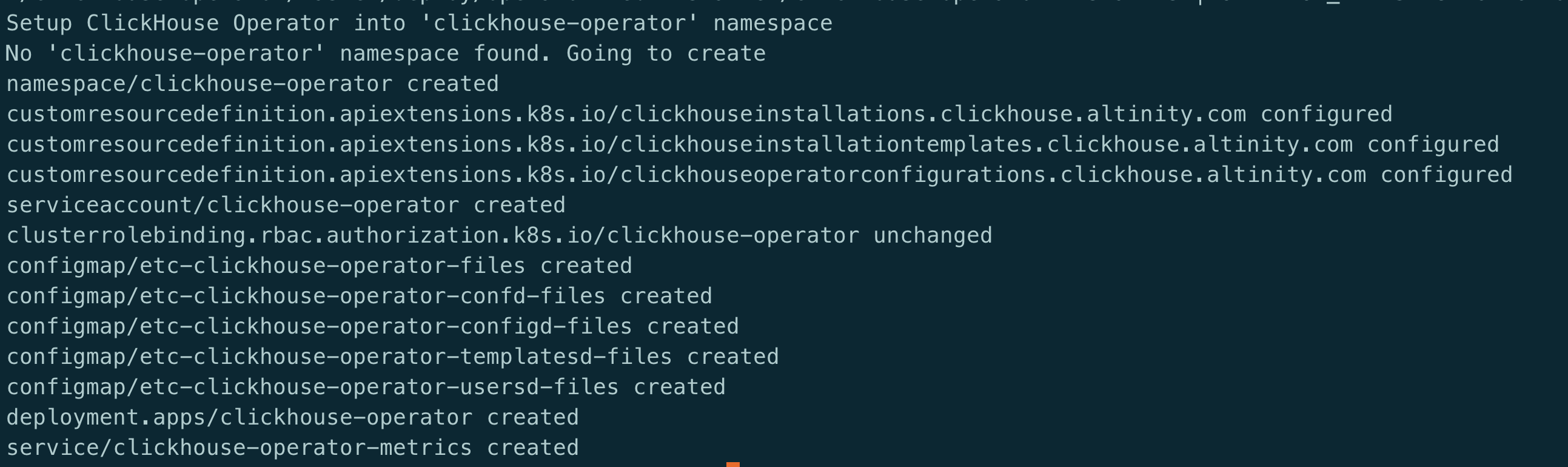

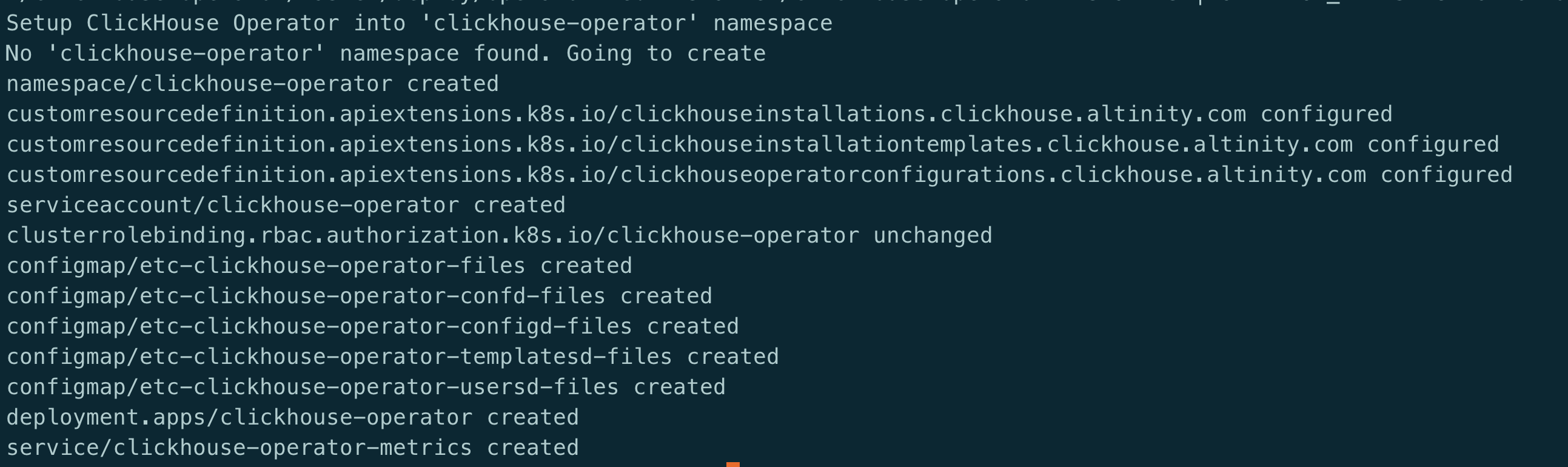

Deploying the operator

The OPERATOR_NAMESPACE variable selects the namespace to deploy into; here it is set to clickhouse-operator.

    curl -s https://raw.githubusercontent.com/Altinity/clickhouse-operator/master/deploy/operator-web-installer/clickhouse-operator-install.sh | OPERATOR_NAMESPACE=clickhouse-operator bash

Deploying ClickHouse

YAML file

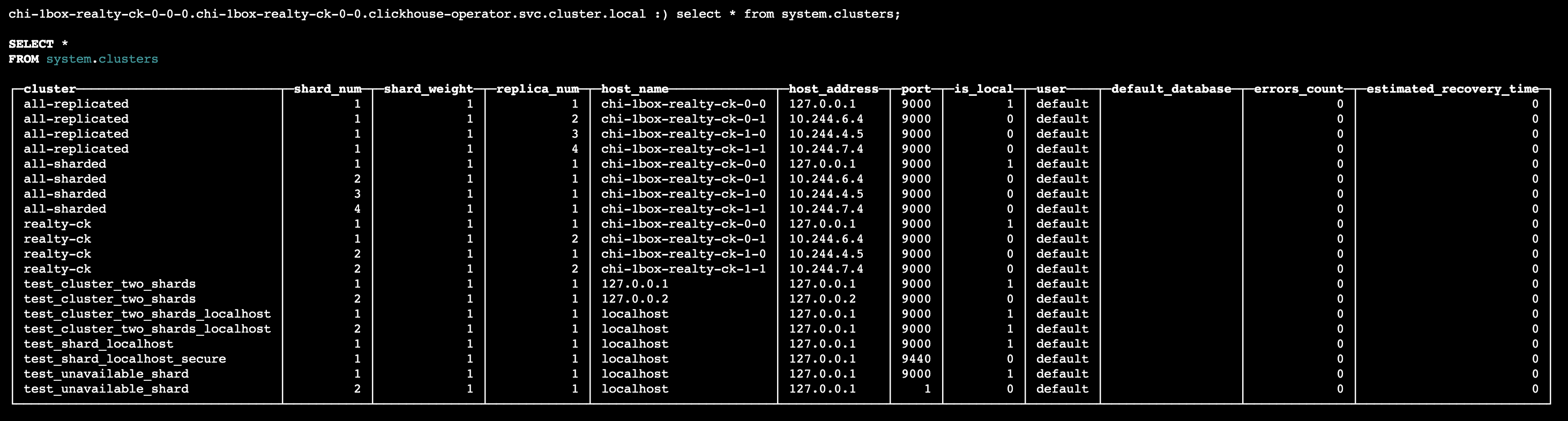

The example is a 2x2 ClickHouse cluster, i.e. 2 shards with 2 replicas each.

    apiVersion: 'clickhouse.altinity.com/v1'
    kind: 'ClickHouseInstallation'
    metadata:
      name: '1box'
    spec:
      defaults:
        templates:
          serviceTemplate: service-template
          podTemplate: pod-template
          dataVolumeClaimTemplate: volume-claim
      configuration:
        settings:
          timezone: "Asia/Shanghai"
        zookeeper:
          nodes:
            - host: zookeeper.clickhouse-operator
              port: 2181
        clusters:
          - name: 'realty-ck'
            layout:
              shardsCount: 2
              replicasCount: 2
      templates:
        serviceTemplates:
          - name: service-template
            spec:
              ports:
                - name: http
                  port: 8123
                - name: tcp
                  port: 9000
              type: ClusterIP
              clusterIP: None
        podTemplates:
          - name: pod-template
            spec:
              tolerations:
                - effect: NoExecute
                  key: clickhouse
                  operator: Equal
                  value: enable
              affinity:
                nodeAffinity:
                  requiredDuringSchedulingIgnoredDuringExecution:
                    nodeSelectorTerms:
                      - matchExpressions:
                          - key: 'clickhouse'
                            operator: In
                            values:
                              - 'enable'
                podAntiAffinity:
                  requiredDuringSchedulingIgnoredDuringExecution:
                    - labelSelector:
                        matchExpressions:
                          - key: 'clickhouse.altinity.com/app'
                            operator: In
                            values:
                              - 'chop'
                      topologyKey: 'kubernetes.io/hostname'
              containers:
                - name: clickhouse
                  imagePullPolicy: IfNotPresent
                  image: yandex/clickhouse-server:latest
                  ports:
                    - name: http
                      containerPort: 8123
                    - name: client
                      containerPort: 9000
                    - name: interserver
                      containerPort: 9009
                  volumeMounts:
                    - name: volume-claim
                      mountPath: /var/lib/clickhouse
                  resources:
                    limits:
                      memory: '4Gi'
                      cpu: '2'
                    requests:
                      memory: '4Gi'
                      cpu: '2'
        volumeClaimTemplates:
          - name: volume-claim
            spec:
              accessModes:
                - ReadWriteOnce
              resources:
                requests:
                  storage: 100Gi
              storageClassName: bigdata-storage

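Notes:

- The nodes are tainted, so the pods need matching tolerations.

- Because local PVs are used, nodeAffinity restricts the pods to suitable nodes, and podAntiAffinity keeps two ClickHouse pods from being scheduled onto the same node.

- CPU and memory can be capped so that ClickHouse does not affect the rest of the cluster.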

    kubectl -n clickhouse-operator apply -f ck-2x2-cluster.yaml

By default, clickhouse-operator ships with a preset username and password; they can be changed in `clickhouse-operator/deploy/operator/clickhouse-operator-install-template.yaml`:

    <password_sha256_hex>716b36073a90c6fe1d445ac1af85f4777c5b7a155cea359961826a030513e448</password_sha256_hex>

    chUsername: clickhouse_operator
    chPassword: clickhouse_operator_password
    chPort: 812
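The `password_sha256_hex` element holds the SHA-256 digest of a password in hex. When changing the password, a digest for the new value is needed; a minimal sketch for producing one, assuming GNU coreutils' `sha256sum` is installed (`sha256_hex` is a name made up for this example):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SHA-256 hex digest of a password.
# printf '%s' avoids hashing a trailing newline, which would change the digest.
sha256_hex() {
  printf '%s' "$1" | sha256sum | cut -d' ' -f1
}

# Usage: paste the result into <password_sha256_hex>...</password_sha256_hex>
#   sha256_hex 'my-new-password'
```

The plain-text `chPassword` and the hashed value have to describe the same password, so both should be updated together.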

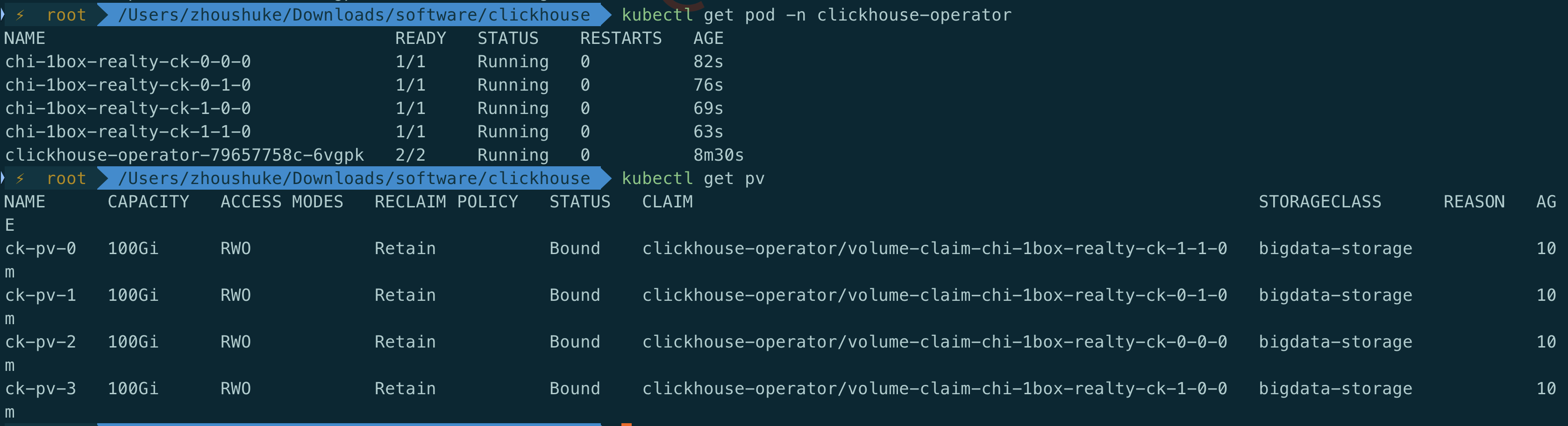

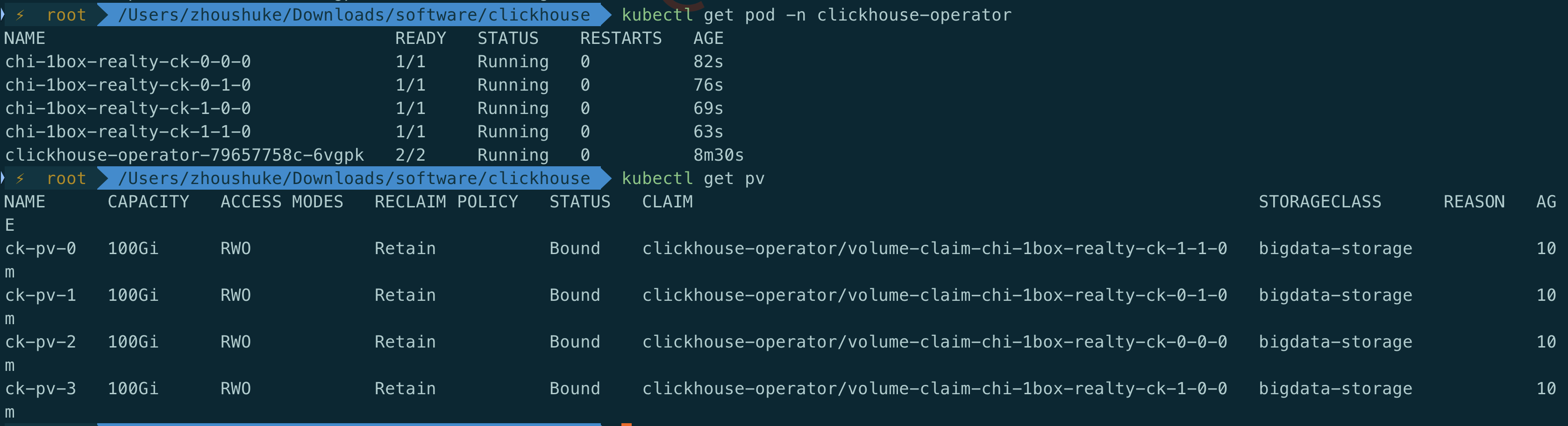

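Verifying the pods

Four pods appear, exactly matching the 2x2 layout, and the PVs have all moved from Available to Bound, which shows that scheduling worked as expected.

The pods are created with names of the form chi-{metadata}-{clusterName}-0-0-0, where {metadata} is the `metadata.name` of the ClickHouseInstallation.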
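To make the naming pattern concrete, the sketch below lists the pod names one would expect for a given layout. It assumes the two indices after the cluster name are the shard and replica index and that the trailing `0` is fixed, which matches the names seen in this deployment; `chi_pod_names` is a hypothetical helper, not an operator command.

```shell
#!/usr/bin/env bash
# Sketch: list the pod names expected for a ClickHouseInstallation, assuming
# the pattern chi-{name}-{cluster}-{shard}-{replica}-0.
# chi_pod_names is a hypothetical helper, not part of the operator.
chi_pod_names() {
  local chi="$1" cluster="$2" shards="$3" replicas="$4"
  local s r
  for ((s = 0; s < shards; s++)); do
    for ((r = 0; r < replicas; r++)); do
      printf 'chi-%s-%s-%d-%d-0\n' "$chi" "$cluster" "$s" "$r"
    done
  done
}

# Usage: compare against `kubectl -n clickhouse-operator get pods`.
#   chi_pod_names 1box realty-ck 2 2
```

For the manifest in this post, that gives four names, from `chi-1box-realty-ck-0-0-0` to `chi-1box-realty-ck-1-1-0`.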

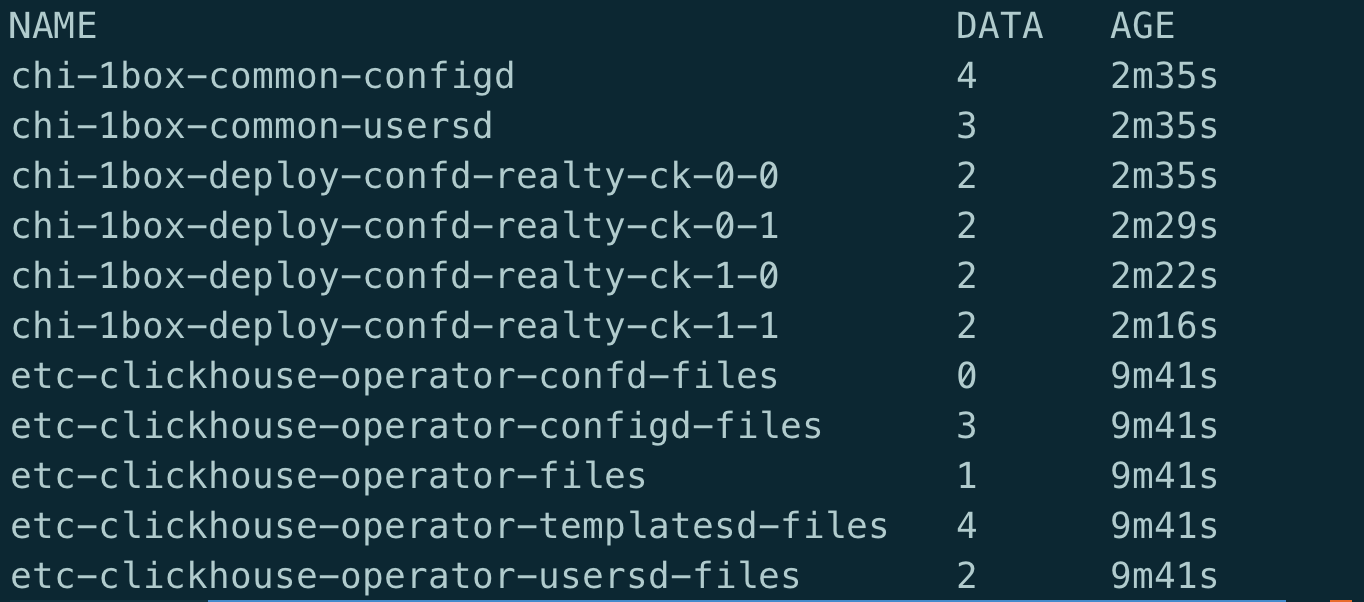

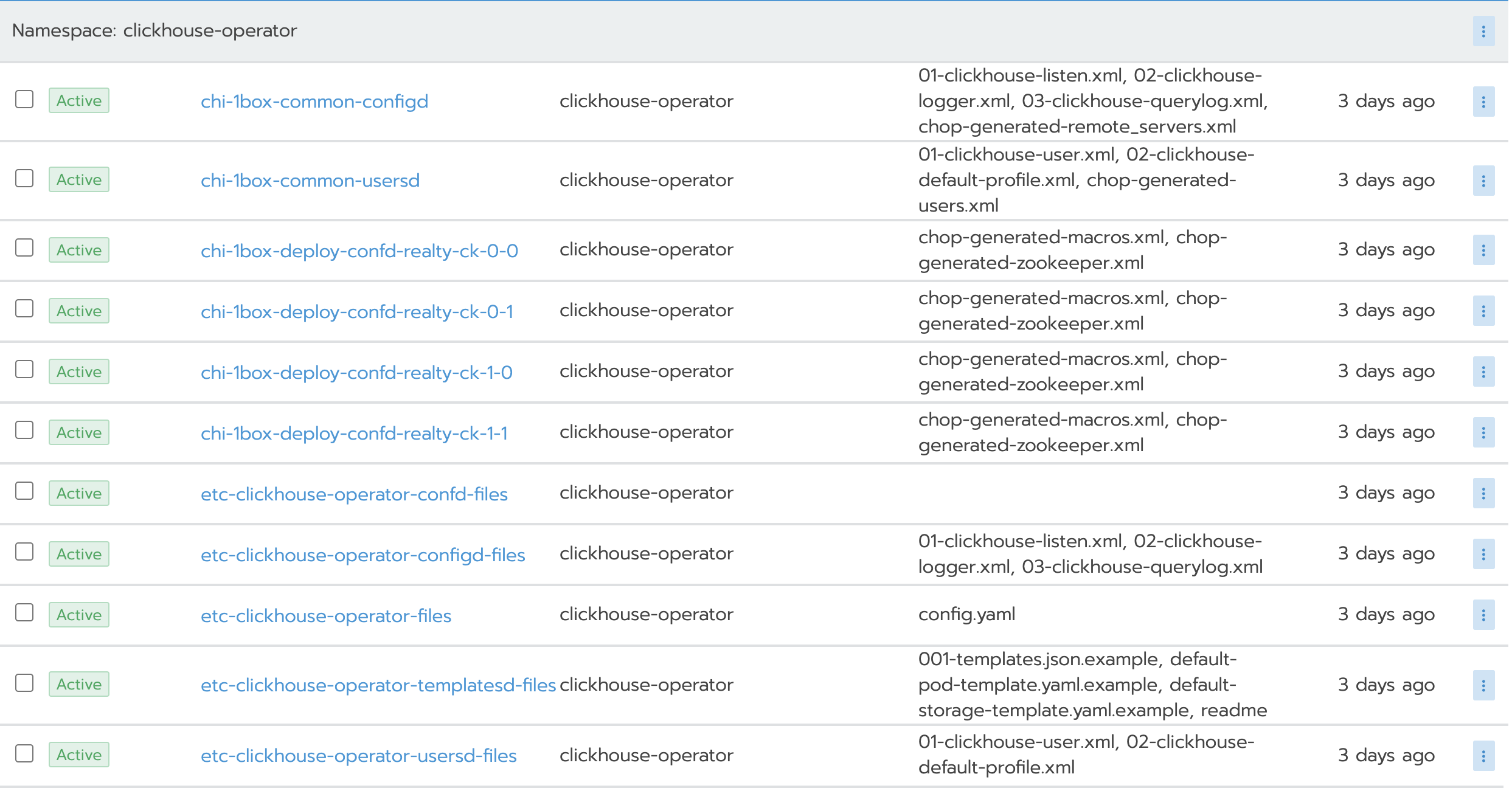

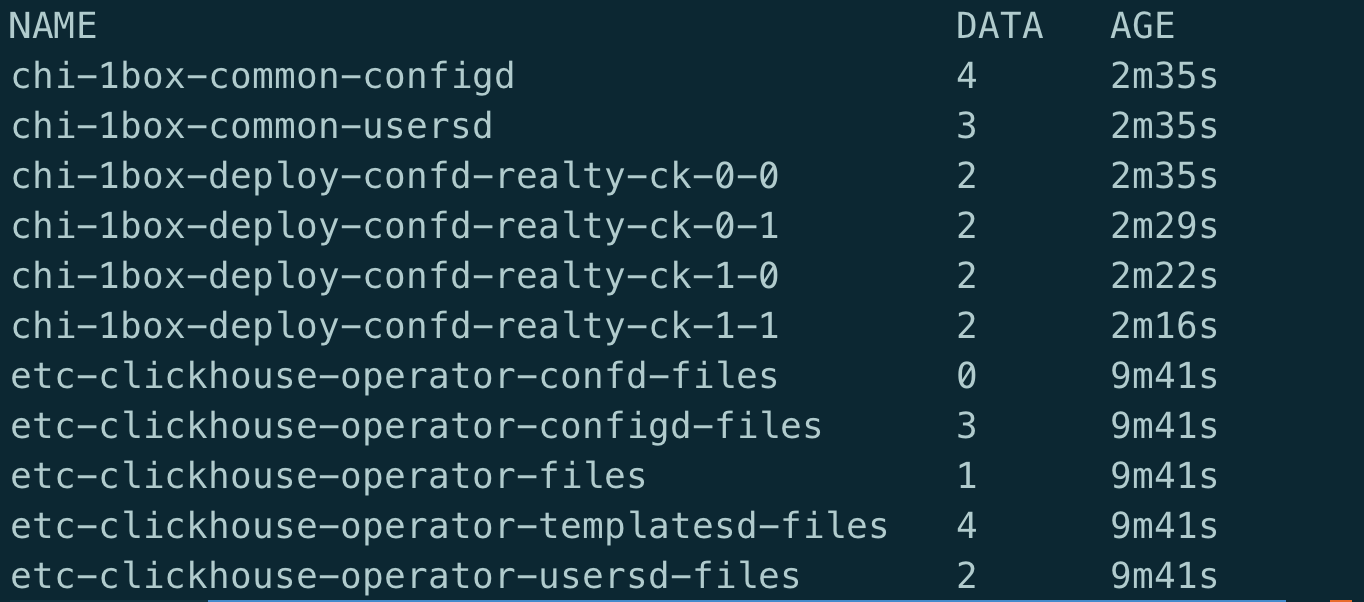

Inspecting the ConfigMaps

    kubectl get cm -n clickhouse-operator

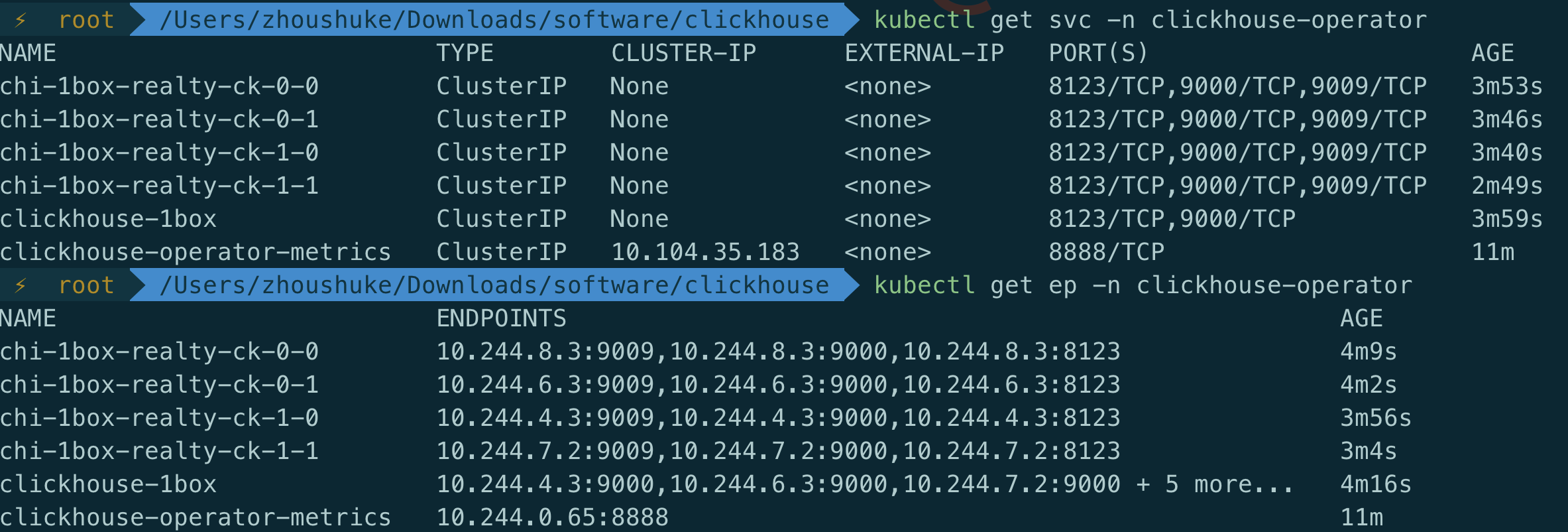

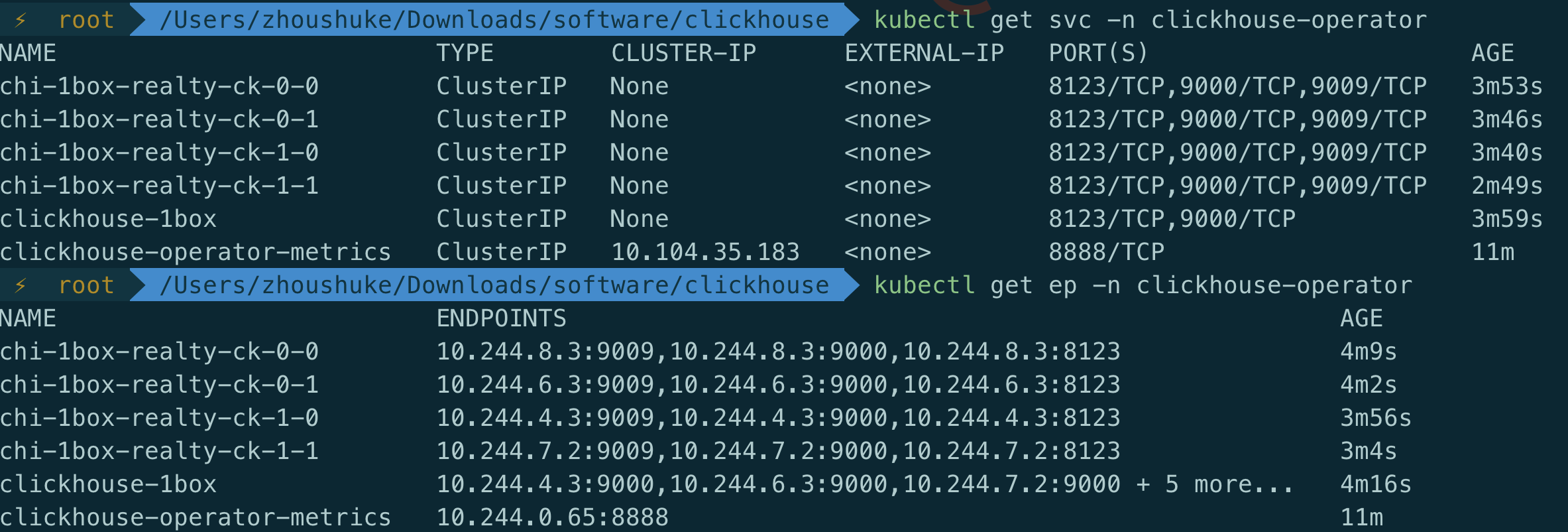

Inspecting the Service and Endpoints objects

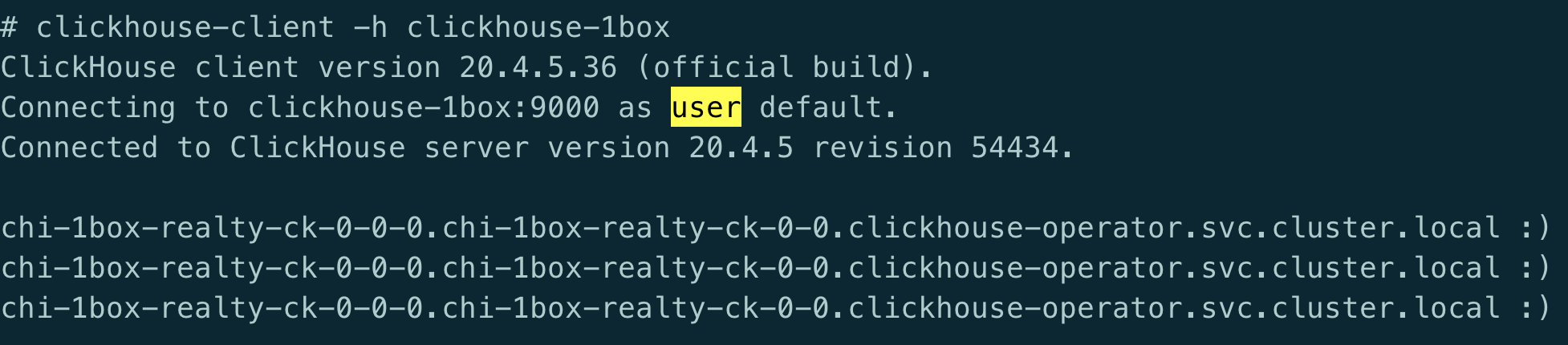

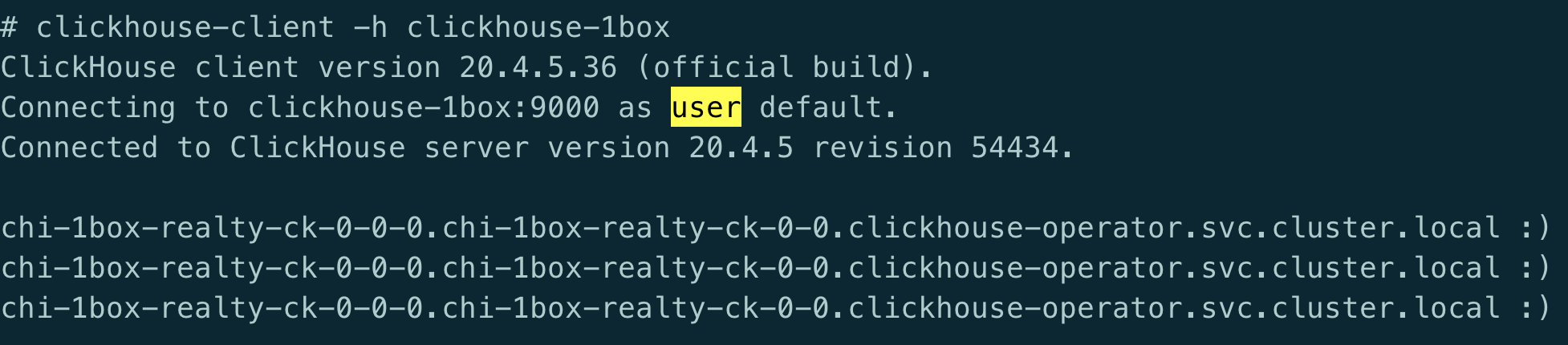

Logging in to the cluster

Finally, the ClickHouse cluster can be reached through the clickhouse-1box service:

    clickhouse-client -h clickhouse-1box --port 9000

Process analysis

Under the directory backing each PV, the following directory structure has been generated:

Directory structure

    .
    ├── access
    │   ├── quotas.list
    │   ├── roles.list
    │   ├── row_policies.list
    │   ├── settings_profiles.list
    │   └── users.list
    ├── data
    │   ├── default
    │   └── system
    │       ├── metric_log
    │       └── trace_log
    ├── dictionaries_lib
    ├── flags
    ├── format_schemas
    ├── metadata
    │   ├── default
    │   ├── default.sql
    │   └── system
    │       ├── metric_log.sql
    │       └── trace_log.sql
    ├── metadata_dropped
    ├── preprocessed_configs
    │   ├── config.xml
    │   └── users.xml
    ├── status
    ├── tmp
    └── user_files

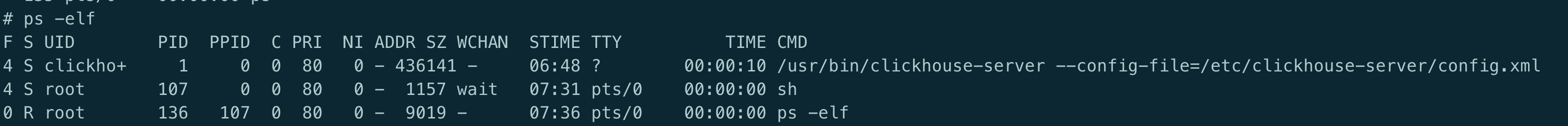

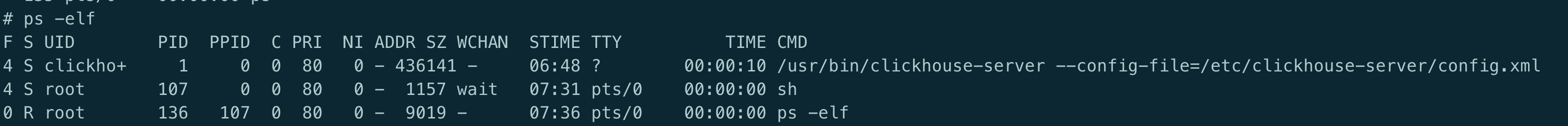

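Startup process

The main configuration file ClickHouse reads at startup is /etc/clickhouse-server/config.xml, but settings in other locations such as /etc/clickhouse-server/config.d can override the main configuration.

Container mounts

Here is what is mounted inside a pod: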

    volumeMounts:
      - mountPath: /var/lib/clickhouse
        name: volume-claim
      - mountPath: /etc/clickhouse-server/config.d/
        name: chi-1box-common-configd
      - mountPath: /etc/clickhouse-server/users.d/
        name: chi-1box-common-usersd
      - mountPath: /etc/clickhouse-server/conf.d/
        name: chi-1box-deploy-confd-realty-ck-0-0
    volumes:
      - name: volume-claim
        persistentVolumeClaim:
          claimName: volume-claim-chi-1box-realty-ck-0-0-0
      - configMap:
          defaultMode: 420
          name: chi-1box-common-configd
        name: chi-1box-common-configd
      - configMap:
          defaultMode: 420
          name: chi-1box-common-usersd
        name: chi-1box-common-usersd
      - configMap:
          defaultMode: 420
          name: chi-1box-deploy-confd-realty-ck-0-0
        name: chi-1box-deploy-confd-realty-ck-0-0

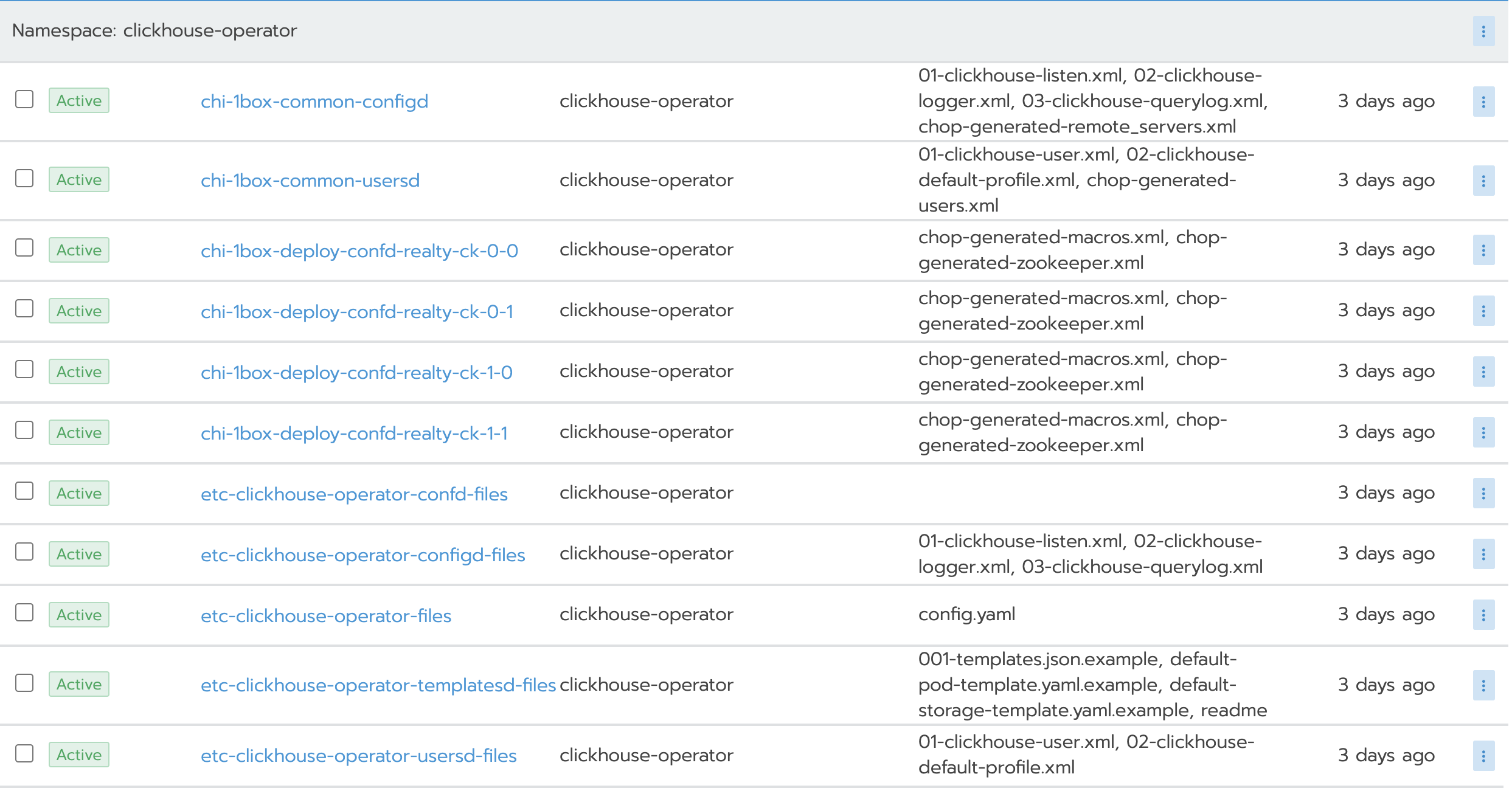

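There are two "common" ConfigMaps. Their contents are identical across all 4 pods, i.e. they hold the cluster-wide configuration. In addition, each pod has its own ConfigMap whose contents differ from pod to pod, for example macros.xml.

Configuration file analysis

After deployment, the generated operator configuration is as follows:

Of these, the lower entries with "operator" in their names are the operator's own configuration. They can be used as shipped or adjusted as needed, although not every configuration file ends up being used. The entries above them are the ClickHouse cluster configuration and need no special attention. The files to focus on are the following.

config.xml

This is the main configuration file passed to ClickHouse at startup. Files under config.d can override its settings or be merged into it.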
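To illustrate the override mechanism: a file placed in `config.d` only needs to contain the settings it changes. The sketch below writes such a fragment by hand, setting the same `timezone` as the manifest in this post. The function name, the file name `timezone.xml`, and the target directory are assumptions for illustration; with the operator, files like this are generated from the ClickHouseInstallation rather than written manually.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a small override fragment into a config.d-style
# directory. ClickHouse merges such fragments over the main config.xml.
write_timezone_override() {
  local dir="$1"
  local tz="${2:-Asia/Shanghai}"
  mkdir -p "$dir"
  cat > "$dir/timezone.xml" <<EOF
<yandex>
    <timezone>${tz}</timezone>
</yandex>
EOF
}

# Usage (the path is an example; inside the pod the directory would be
# /etc/clickhouse-server/config.d):
#   write_timezone_override ./config.d
```

The merged result is what ends up in `preprocessed_configs/config.xml` in the data directory.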

config.d

Path to folder where ClickHouse configuration files common for all instances within CHI are located.

Cluster-wide configuration files; the shard-related configuration lives in this directory.

conf.d

Path to folder where ClickHouse configuration files unique for each instance (host) within CHI are located.

Configuration unique to each instance, such as the instance name.

users.d/users.xml

Path to folder where ClickHouse configuration files with users settings are located.

Files are common for all instances within CHI

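User and permission configuration.

The contents of these configuration files are fairly easy to follow, so readers can inspect them on their own.

Client login

If no port is given, the client uses the default port 9000.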
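The default can be expressed as a small wrapper that builds the client command line and falls back to 9000 when no port is passed. This is only a sketch: `ck_client_cmd` is a hypothetical helper name, and it prints the command rather than running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the clickhouse-client command line, falling back
# to port 9000 when no port is given.
ck_client_cmd() {
  local host="$1"
  local port="${2:-9000}"
  printf 'clickhouse-client -h %s --port %s\n' "$host" "$port"
}

# Usage:
#   ck_client_cmd clickhouse-1box         # uses port 9000
#   ck_client_cmd clickhouse-1box 9000    # same, with the port spelled out
```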

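That completes the clickhouse-operator deployment. The docs/chi-examples directory on GitHub contains many examples for a wide range of scenarios and is well worth consulting.

Follow-up operations such as adding shards and upgrading the ClickHouse version are also documented in detail on GitHub.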
